Hi again.

Bilinear interpolation (and many other filters) uses adjacent pixels for minification. I think it's OK to make an indent in 1-2 pixels, just to avoid this problem. But at a high scale it doesn't help. I don't know whether this is related to CGE or a common thing. But setting too large indent is, in my opinion, wasteful. Maybe it can be solved differently?

I am not 100% sure I understand your question, as I don't know what you mean by "indent" (you mention "indent in 1-2 pixels", "too large indent").

If you're looking to improve how textures look (when seen from far away or at steep angles):

Bilinear filtering, mipmaps, and anisotropic filtering are all common techniques (used in CGE and many other engines).

When bilinear filtering is used with mipmaps (which is the default in CGE), it does not only average adjacent pixels. Thanks to mipmaps, it actually averages larger texture areas.
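To make "averages larger texture areas" concrete, here is a rough sketch of the standard level-of-detail rule (λ = log2 ρ, as in the OpenGL specification, where ρ is roughly how many texels one screen pixel covers). It is a simplified model of what the GPU does, not CGE code; the ρ values are made up for illustration.

```python
import math

def mip_level(texels_per_pixel: float) -> float:
    """Level of detail for isotropic mipmapping: lambda = log2(rho),
    clamped at 0 (when magnifying, the base level is used)."""
    return max(0.0, math.log2(texels_per_pixel))

def texels_averaged(level: int) -> int:
    """Each mip level halves width and height, so one texel at `level`
    summarizes a (2**level x 2**level) block of the base image."""
    side = 2 ** level
    return side * side

for rho in (1, 2, 8, 32):
    lvl = mip_level(rho)
    print(f"{rho:>2} texels/pixel -> level {lvl:.0f}, "
          f"~{texels_averaged(int(lvl))} base texels per sample")
```

So a texture seen at 8 texels per pixel is sampled from a level where each texel already holds the average of an 8x8 block. With `minLinearMipmapLinear` the GPU additionally blends the two nearest levels.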

If the texture looks blurry in the distance, you can fight it by selectively using anisotropic filtering. This should be requested per-texture (because it's necessary only on textures that may be visible from far away, and at a steep angle). An example of how to request it is in https://github.com/castle-engine/castle-engine/blob/master/examples/3d_rendering_processing/anisotropic_filtering/anisotropic_filtering.lpr. It requires code that adds "TextureProperties" nodes to a scene.
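Why steep angles look blurry, and why anisotropic filtering helps, can be sketched with the level-of-detail formula from the OpenGL `EXT_texture_filter_anisotropic` extension. This is a simplified model (real GPUs approximate it), and the pixel footprint numbers are hypothetical.

```python
import math

def lod_isotropic(px: float, py: float) -> float:
    # Isotropic mipmapping sizes the filter to the LONGER axis of the
    # pixel footprint (in texels), so an elongated footprint is over-blurred.
    return max(0.0, math.log2(max(px, py)))

def lod_anisotropic(px: float, py: float, max_aniso: int = 16):
    # Anisotropic filtering takes N samples along the long axis and
    # chooses the level from Pmax / N. Footprints must be positive.
    p_max, p_min = max(px, py), min(px, py)
    n = min(math.ceil(p_max / p_min), max_aniso)
    return n, max(0.0, math.log2(p_max / n))

# Ground at a steep angle: one pixel covers 1 texel across, 16 texels deep.
print(lod_isotropic(1.0, 16.0))       # level 4: 16x16 averaging, blurry
print(lod_anisotropic(1.0, 16.0))     # 16 samples from level 0: sharp
print(lod_anisotropic(1.0, 16.0, 4))  # limited to 4x: 4 samples from level 2
```

This also shows why anisotropic filtering costs more: it trades one blurry sample for several sharper ones, which is why requesting it only on textures that need it makes sense.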

It seems I misunderstood the problem initially (thank you to @eugeneloza for explaining it to me!).

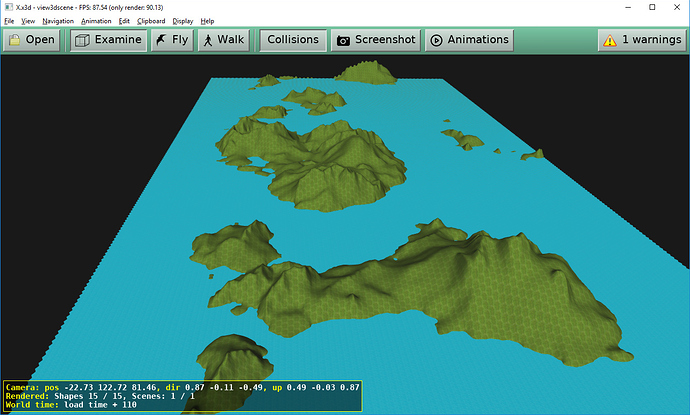

You use a texture atlas. That is, you pack an area with water and an area with grass into one texture image.

When doing filtering, the water and grass pixels are averaged, and this causes the problems you observe.

The reason for this problem is mipmaps: with them, larger texture areas are averaged.
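A tiny 1D model shows how the averaging reaches across the atlas boundary. It assumes a box filter (average each pair of texels) and a hypothetical 16-texel atlas row with 6 texels of water (0.0) and 10 of grass (1.0); real mipmap generation works in 2D, but the effect is the same.

```python
WATER, GRASS = 0.0, 1.0

def next_mip(row):
    """Box-filter one row down to half size (average each pair of texels)."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row), 2)]

def mip_chain(row):
    """All mip levels of a row whose length is a power of two."""
    levels = [row]
    while len(levels[-1]) > 1:
        levels.append(next_mip(levels[-1]))
    return levels

# Hypothetical atlas row: 6 texels of water, then 10 texels of grass.
atlas = [WATER] * 6 + [GRASS] * 10
for level, row in enumerate(mip_chain(atlas)):
    print(level, row)
```

Any value strictly between 0.0 and 1.0 is a "water mixed with grass" color. Here it first appears at level 2, so it becomes visible as soon as the terrain is far enough away for the GPU to sample that level.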

-

One straightforward solution is to disable mipmaps. Set `Scene.Attributes.MinificationFilter := minLinear` (this means mipmaps are not used; by default we use `minLinearMipmapLinear`).

However, while it would address your problem directly, I cannot really advise this solution. Mipmaps are usually a good idea, especially if you can view the texture from far away, like on your screenshots. If you disable mipmaps, you will get rid of one problem, but introduce another: the textures will look like "noise" when viewed from far away, especially if you move the camera around.
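The "noise" comes from aliasing: without mipmaps, each screen pixel takes one linear sample and skips most texels in its footprint. A minimal 1D sketch, assuming a hypothetical texture of 1-texel stripes seen at 8 texels per pixel (the sampling model is a simplification of GPU linear filtering with clamp-to-edge):

```python
import math

# Hypothetical fine detail: alternating 1-texel stripes, 64 texels wide.
texture = [float(i % 2) for i in range(64)]

def sample_linear(tex, u):
    """Linear sample at texel-space coordinate u (texel centers at i + 0.5),
    with clamp-to-edge addressing."""
    x = u - 0.5
    i0 = math.floor(x)
    t = x - i0
    a = tex[min(max(i0, 0), len(tex) - 1)]
    b = tex[min(max(i0 + 1, 0), len(tex) - 1)]
    return a * (1 - t) + b * t

def render(offset, texels_per_pixel=8, pixels=4):
    """No mipmaps: one sample at each screen pixel's center."""
    return [sample_linear(texture, offset + (p + 0.5) * texels_per_pixel)
            for p in range(pixels)]

print(render(0.5))  # camera at one position: all dark
print(render(1.5))  # camera moved by one texel: all bright
print(render(0.0))  # in between: gray
print(sum(texture[0:8]) / 8)  # a level-3 mipmap texel: always 0.5
```

Moving the camera by a single texel flips the whole area between dark and bright, which is the flicker you would see. With mipmaps, the sample comes from a level that already holds the stable average.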

-

So the proper solution I would suggest is to just not pack textures in this case. You have to be careful with texture packing when texture filtering with mipmaps comes into play. So just use a separate image for water and a separate one for grass.

And this is indeed something that would apply to any rendering engine, CGE or not.

Thanks for the answer, michalis. And eugeneloza for the clarification.

OK, separate textures will indeed solve the problem. But how can I use many textures addressed to a single shape? (On the screenshot above you can see just 1 or 2 shapes. If I create a shape for every hexagon, it will take a lot of memory. Oh… and then even a single hexagon may contain parts of a few textures…)

BTW:

- Maybe there is some way to create mipmaps manually?

- Maybe it is possible to specify areas of the texture that will be interpolated separately?

- I found one more option: texture arrays (arrays of textures with the same size). Maybe they are available in CGE?

OK, separate textures will indeed solve the problem. But how can I use many textures addressed to a single shape?

Each X3D Shape node must have a separate texture. There are 3D model formats/tools that allow multiple materials (thus multiple textures) on a single shape, but internally these are rendered as multiple separate shapes anyway (i.e. they have to be split for the GPU).

For example, you can create hexagons in Blender, use multiple materials on a single Blender object, and export it to glTF. Along the way, this object is split into multiple shapes anyway.

If you construct your level purely in code, then you just have to account for it. Each shape must have a separate texture.
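When generating the level in code, "one texture per shape" boils down to grouping triangles by texture before building shapes. A minimal sketch, assuming hypothetical hexagon data (center plus texture name) and flat-topped hexagons made of 6 triangles; each resulting list would then become one shape (for example, one X3D Shape with its own triangle geometry and texture node).

```python
import math
from collections import defaultdict

# Hypothetical input: each hexagon has a center and a texture name.
hexes = [((0.0, 0.0), "water"), ((1.5, 0.87), "grass"), ((3.0, 0.0), "water")]

def hex_triangles(center, radius=1.0):
    """Six triangles (tuples of 2D vertices) forming a flat-topped hexagon."""
    cx, cy = center
    corners = [(cx + radius * math.cos(math.radians(60 * k)),
                cy + radius * math.sin(math.radians(60 * k)))
               for k in range(6)]
    return [(center, corners[k], corners[(k + 1) % 6]) for k in range(6)]

def batch_by_texture(hexes):
    """One triangle list per texture: each list would become one shape."""
    batches = defaultdict(list)
    for center, texture in hexes:
        batches[texture].extend(hex_triangles(center))
    return dict(batches)

for texture, triangles in batch_by_texture(hexes).items():
    print(texture, len(triangles), "triangles")
```

The number of shapes then grows with the number of distinct textures, not with the number of hexagons.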

You can use DynamicBatching to speed up rendering of many shapes. (DynamicBatching only works under certain conditions, so it is best to test as early as possible whether it helps in your case: activate DynamicBatching and observe whether the number of shapes reported by MyViewport.Statistics.ToString gets lower.)

… Hm, that being said, the above is true only if you don't use multi-texturing. Multi-texturing allows using multiple textures on a single shape, like layers in GIMP or Photoshop. It is possible to write a shader that selects one layer or the other, depending on some per-vertex attribute. This is a bit complicated; it's a somewhat "hacky" usage of multi-texturing. So first I would advise you to try using separate shapes for each texture. But if needed, I can elaborate on this solution. It may be feasible, especially if you generate all your geometry by code or by hand-crafted X3D files.

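The idea behind the layer-selecting shader can be modeled in a few lines. This is a hypothetical Python simulation of the fragment-shader logic, not CGE or GLSL code: each layer is a separate texture with its own mipmaps, and a per-vertex attribute picks which one to sample, so filtering never mixes water with grass. In a real GLSL shader such an attribute would typically be declared `flat`, so it is not interpolated between vertices with different layers.

```python
WATER, GRASS = 0.0, 1.0

def mip_chain(row):
    """Box-filtered mip levels of a row whose length is a power of two."""
    levels = [row]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([(prev[i] + prev[i + 1]) / 2
                       for i in range(0, len(prev), 2)])
    return levels

# Two SEPARATE textures (layers), 8 texels each, each with its own mipmaps.
layers = [mip_chain([WATER] * 8), mip_chain([GRASS] * 8)]

def shade(layer_attr, u, level):
    """Mimics the fragment shader: the per-vertex attribute selects one
    layer; u in [0, 1) picks a texel (nearest) at the given mip level."""
    mips = layers[round(layer_attr)]
    row = mips[min(level, len(mips) - 1)]
    return row[min(int(u * len(row)), len(row) - 1)]

print([shade(0, 0.9, lvl) for lvl in range(4)])  # water at every level
print([shade(1, 0.1, lvl) for lvl in range(4)])  # grass at every level
```

Unlike the atlas, no mip level of either layer contains the other layer's colors.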

- Maybe there is some way to create mipmaps manually?

There is: you can provide explicitly created mipmaps in a DDS file (see Texturing component | Castle Game Engine). You can generate such mipmaps in GIMP and even edit them manually.

But it will not help you in your case. Your manually-crafted mipmaps, if they contain both grass and water, will still cause "averaging" of grass and water colors in some places.

- Maybe it is possible to specify areas of the texture that will be interpolated separately?

There is no such possibility. The behavior of filtering, including the behavior of mipmaps, is controlled by the GPU, and there is no way to "selectively interpolate" some parts.

- I found one more option: texture arrays (arrays of textures with the same size). Maybe they are available in CGE?

Not yet (but we may add it some day). Anyway, it would not solve this problem directly. If anything, it would be similar to the "hacky usage of multi-texturing" I described above, so this is already possible without texture arrays.

Yeah, this app is a hexagonal map generator, so there is nothing except code.

I'm afraid that's what I started with. It was too heavy (in terms of system resources). So I implemented a geometry consolidator that creates composite shapes for a given area, currently sectors of 40x40 hexagons. It takes far fewer resources.
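Sector consolidation and "one texture per shape" can be combined: if each (sector, texture) pair becomes one shape, the shape count stays small. A rough sketch, assuming non-negative offset hex coordinates and a hypothetical 200x200 map whose left half is water and right half is grass:

```python
SECTOR = 40  # sector size in hexagons, as in the post

def sector_of(q, r, size=SECTOR):
    """Sector index of a hexagon (non-negative offset coordinates assumed)."""
    return (q // size, r // size)

def count_shapes(map_w, map_h, texture_at, size=SECTOR):
    """Shapes needed if each distinct (sector, texture) pair is one shape."""
    keys = set()
    for q in range(map_w):
        for r in range(map_h):
            keys.add((sector_of(q, r, size), texture_at(q, r)))
    return len(keys)

def half_water(q, r):
    # Hypothetical layout: water for q < 100, grass elsewhere.
    return "water" if q < 100 else "grass"

print(200 * 200, "hexagons")                            # one shape per hex
print(count_shapes(200, 200, half_water), "shapes")     # 25 sectors, 30 shapes
```

Only sectors that actually contain several textures are split, so the cost of separate textures is a small multiple of the sector count rather than a return to per-hexagon shapes.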

I planned to use multi-texturing for its regular purposes: alpha blending, bump mapping, smooth transitions between texture blocks. I'm not sure I can combine that with this alternative purpose.

Yes, if the dimensions of the texture areas are not powers of two, and the mipmaps are not created in power-of-two steps.
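The power-of-two point can be checked per axis: with 2x2 box-filtered mipmaps, a texel at level k covers base texels [j·2^k, (j+1)·2^k), so a tile stays unmixed as long as both of its edges are multiples of 2^k. A minimal sketch under that assumption (it only covers mipmap generation; bilinear sampling near a tile edge can still read the neighbouring tile, so some padding or a limited number of mip levels is usually still needed):

```python
def first_mixing_level(offset, size):
    """First mip level at which a box-filtered mip chain mixes a tile
    (starting at `offset`, `size` texels wide, size > 0) with its
    neighbours along one axis."""
    level = 0
    step = 2
    while offset % step == 0 and (offset + size) % step == 0:
        level += 1
        step *= 2
    return level + 1

print(first_mixing_level(0, 8))   # aligned 8-texel tile: clean up to level 3
print(first_mixing_level(8, 8))   # same for its neighbour
print(first_mixing_level(0, 6))   # 6-texel tile: mixes already at level 2
print(first_mixing_level(6, 10))  # misaligned tile: also level 2
```

So power-of-two tiles aligned to their own size stay clean until the whole tile shrinks to a single texel, while odd sizes or offsets start bleeding much earlier.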

I found several articles on this subject. Most authors recommend using texture arrays if they are available, and other techniques only if texture arrays are not supported.

I also found some tricks that a texture atlas builder can use (I build the atlas automatically anyway) that make it work in most cases. I will try that first, as the cheapest way.
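One common trick for atlas builders is a gutter filled by edge extension: each tile is surrounded by copies of its own border texels, so filtering near the edge reads the tile's own colors instead of the neighbour's. A minimal sketch (the function name and list-of-rows image format are hypothetical). A gutter of g texels only protects roughly the mip levels whose texels span no more than g base texels, which matches the observation in the first post that 1-2 pixels stop helping at a high scale.

```python
def pad_tile(tile, gutter):
    """Surround a 2D tile (list of rows) with `gutter` texels on every side,
    each copied from the nearest edge texel of the tile (edge extension)."""
    h, w = len(tile), len(tile[0])

    def texel(y, x):
        # Clamp coordinates into the tile, like clamp-to-edge addressing.
        return tile[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [[texel(y, x) for x in range(-gutter, w + gutter)]
            for y in range(-gutter, h + gutter)]

for row in pad_tile([[1, 2], [3, 4]], 1):
    print(row)
```

Combined with power-of-two tile sizes and alignment, this usually keeps the visible bleeding to the farthest mip levels.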