Greetings.

In another topic I promised to give you a test case for the slow shadow volume calculation. I have created a simple world with a dozen instances of a “house”, all with Cache=true. The model is neither too beautiful nor too complicated. It’s just for the test case, and it has already proven to slow down loading and even crash the game.

Before I proceed, I’d like to say that all the issues listed below only happen when loading from a binary glb, or from an x3dv that has textures packed inside. The same models exported to the JSON version of glTF load nearly instantly. With them, LOD doesn’t freeze the screen, the shadow volumes work without issues, and there are no crashes.

My tests were done with shadow volumes, and I divided them into 2 main categories: without LOD, and with it. The LOD uses 2 hastily made “middle” and “far” models, but that’s good enough, and the fps typically stays nice. However, my goal was to keep them unoptimized.

With 12-20 instances of TScene, the loading time is reasonable. For a single model it’s about 20 seconds, and with 3 LOD levels it’s about twice as long, as expected. When playing, I have to look around first, which often makes the viewport freeze for a short time. The models are supposed to be cached, but somehow the 2nd and 3rd LOD levels need to be reloaded - after turning 360 degrees everything works quite fine.

However, memory usage is very questionable. Without LOD, having just 1 model takes some 40GB of my memory. Loading the x3dv with LOD took over 50GB of RAM (peaking at 59.4GB) and a whole 11GB of VRAM. I don’t think that’s right.

For comparison, the JSON glTF with separate textures loaded in a few seconds and used 566MB of RAM plus a few MB of VRAM.

After loading, RAM usage sometimes goes down to “just” 16GB, but not in every scenario. I understand that many players have this “just” 16GB for the whole system, so it’s still well above my expectations. GPU memory stays at the full 11GB of VRAM + 14.5GB of shared memory (part of the 16GB above).

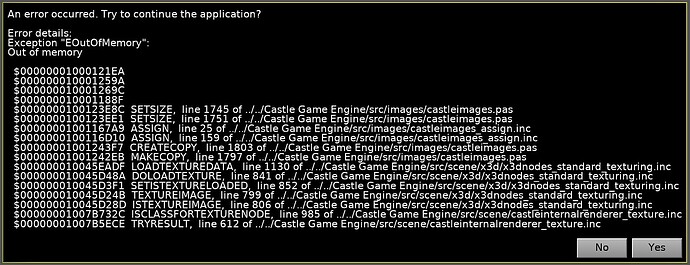

When I loaded it with 20 models in Debug mode, I got a crash with EOutOfMemory while the engine was trying to load some texture/picture. The “lod_far” model doesn’t use a normal map or a roughness map, as they wouldn’t be seen anyway. I don’t believe that’s the cause, though, because memory usage doesn’t improve for the 2 other models.

Switching Shadows off on the directional light didn’t improve the memory consumption. Toggling CastShadows on the scenes didn’t help either. So shadow volumes are not the cause of the issue.

Turning off textures in RenderOptions also doesn’t help, as the binary *.glb needs to be loaded anyway. But it’s supposed to be loaded only once, so it shouldn’t consume that much memory?
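For anyone who wants to check how much texture data is actually packed inside a binary *.glb, here is a minimal sketch in Python. It is not part of the engine or of the test case. It assumes a standard glTF 2.0 binary container (12-byte header followed by a JSON chunk and a BIN chunk), and the function names `read_glb_chunks` and `embedded_image_sizes` are made up for illustration. Note that it reports the compressed size of each packed image as stored in the file, not the size after decoding in RAM or VRAM.

```python
import json
import struct
import sys

GLB_MAGIC = 0x46546C67   # ASCII "glTF", little-endian
CHUNK_JSON = 0x4E4F534A  # ASCII "JSON"
CHUNK_BIN = 0x004E4942   # ASCII "BIN\0"


def read_glb_chunks(data):
    """Split GLB bytes into the parsed JSON document and the binary buffer."""
    if len(data) < 12:
        raise ValueError("too short to be a GLB file")
    magic, version, total_length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file (bad magic)")
    if version != 2:
        raise ValueError("only glTF 2.0 binary files are handled here")

    gltf, binary = None, b""
    offset = 12
    end = min(total_length, len(data))
    while offset + 8 <= end:
        # Each chunk: uint32 length, uint32 type, then the chunk data.
        chunk_length, chunk_type = struct.unpack_from("<II", data, offset)
        offset += 8
        chunk = data[offset:offset + chunk_length]
        offset += chunk_length
        if chunk_type == CHUNK_JSON:
            gltf = json.loads(chunk.decode("utf-8"))
        elif chunk_type == CHUNK_BIN:
            binary = chunk
    if gltf is None:
        raise ValueError("GLB file has no JSON chunk")
    return gltf, binary


def embedded_image_sizes(data):
    """Return (name, mime_type, byte_length) for every image packed in the GLB."""
    gltf, _ = read_glb_chunks(data)
    views = gltf.get("bufferViews", [])
    result = []
    for index, image in enumerate(gltf.get("images", [])):
        view_index = image.get("bufferView")
        if view_index is None:
            continue  # image is referenced by URI, so it is not packed inside
        name = image.get("name", "image%d" % index)
        size = views[view_index]["byteLength"]
        result.append((name, image.get("mimeType"), size))
    return result


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python glb_images.py path/to/model.glb
    with open(sys.argv[1], "rb") as f:
        for name, mime, size in embedded_image_sizes(f.read()):
            print("%s (%s): %.2f MB" % (name, mime, size / (1024 * 1024)))
```

Running it on both the *.glb and the JSON glTF export of the same model would at least show whether the packed images themselves differ between the two files.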

In Release mode, 20 models consumed nearly all my memory, but as the debugger wasn’t attached I could allocate up to some 61GB without a crash.

What about using more than 20 TScene?

One time I got an error dialog saying something about an object’s parent being wrong, but I couldn’t take a screenshot. The second run didn’t go any better - the message had funny white rectangles instead of letters, and I ended up rebooting the system - it’s the fastest way to solve memory leaks IMHO.

The crash isn’t consistent, as sometimes memory doesn’t peak above 61GB. Usually I can have 20 TScene and it runs at about 60 fps (my display limit).

I hope what I wrote makes sense and is helpful, even if it’s maybe too chaotic. I believe this test case will help to improve some bits and bobs sometime in the future. It may also be of benefit to others, so they know that some file formats can cause serious issues.

The case files, zipped, are 150MB so I uploaded them to Proton Drive